Artificial intelligence used to be just a tool — a marvel of logic and programming. But now, it’s something else entirely. It’s a mirror that reflects what we want to see, a mask that hides the truth, and increasingly, a weapon reshaping how we perceive reality. The most unsettling part? It doesn’t even need to lie. It only needs to look true.

Today, generative AI can create strikingly believable images, voices, videos, and even entire identities. We’re not just talking about spam or phishing anymore. We’ve crossed into a world where AI can counterfeit credibility itself, and it does so with unsettling ease.

AI-Powered Fraud: It’s Already Here

This isn’t a future problem — it’s a present one. Fraudsters across industries — insurance, finance, retail, even education — are using AI in ways we’re just beginning to grasp.

1. The Rise of Fake Refunds & Customer Scams

Online retailers are reporting a surge in refund claims backed by “proof” of damage. Crushed boxes, broken gadgets, misleading unboxing videos: more and more of it is AI-generated. Sometimes, the products never existed at all.

We’re also seeing whole customer identities spun from scratch. Faces, email threads, shopping histories — fabricated with the click of a button. This isn’t just fraud by a few bad actors. It’s bot-driven and scalable.

2. Insurance Fraud Grows Up

Think about submitting a claim for water damage. Now imagine attaching a realistic photo of a flooded basement. But the photo? Fully AI-generated. No flood, no damage, just pixels. Overworked adjusters might not spot the difference, and automated claim-screening systems may miss it too.

Some scammers go even further, using deepfaked phone calls to support their claims.

3. Synthetic Identities in the Financial World

Fake people are applying for real credit cards. They come with resumes, tax histories, even references, fabricated outright or stitched together from stolen personal data. These “ghosts” get loans, make transactions, and disappear. Good luck tracing them.

Why We’re Falling For It

The success of these scams isn’t about perfect tech. It’s about very human flaws.

- Confirmation Bias: We believe what fits our expectations.

- Visual Trust: If something looks real, our brain fast-tracks it as truth.

- Mental Fatigue: Under pressure, we don’t investigate. We just trust.

These mental shortcuts are evolutionary tools. Unfortunately, fraudsters armed with AI have learned how to hijack them.

Forget Reverse Image Search — It’s Obsolete

Not long ago, if an image looked off, you’d reverse-search it. Maybe it’d show up on a stock site, and you’d call it a fake. Easy.

But AI-generated images don’t exist anywhere else. They’re not copied. They’re born from scratch. Reverse search gives you nothing. Silence. And suddenly, that silence starts to look like evidence of authenticity, when it proves nothing at all.
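To make that silence concrete, here is a toy sketch of how near-duplicate image lookup can work. It uses a simple average hash (aHash) as a stand-in technique; it is not how any particular search engine is implemented, and it assumes each image has already been shrunk to an 8x8 grid of grayscale values. A lightly edited copy of an indexed photo still matches, while a freshly generated image matches nothing, and that empty result says nothing about whether the image is genuine.

```python
from typing import Dict, List, Sequence

def average_hash(gray: Sequence[Sequence[int]]) -> int:
    """64-bit average hash of an 8x8 grayscale grid (assumed already downscaled)."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        # One bit per pixel: 1 if at or above the mean brightness, else 0.
        bits = (bits << 1) | (1 if p >= mean else 0)
    return bits

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def reverse_lookup(query: Sequence[Sequence[int]], index: Dict[str, int],
                   max_distance: int = 5) -> List[str]:
    """Names of indexed images whose hash is within max_distance bits of the query."""
    q = average_hash(query)
    return [name for name, h in index.items() if hamming(q, h) <= max_distance]

if __name__ == "__main__":
    # Hypothetical 8x8 test images.
    original = [[r * 8 + c for c in range(8)] for r in range(8)]
    brightened_copy = [[p + 10 for p in row] for row in original]
    generated = [[255 if (r + c) % 2 else 0 for c in range(8)] for r in range(8)]
    index = {"stock_photo": average_hash(original)}
    print(reverse_lookup(brightened_copy, index))  # ['stock_photo']
    print(reverse_lookup(generated, index))        # []
```

The 5-bit threshold is an arbitrary illustrative choice. The point is the asymmetry: a match is informative, but "no match" only means the image was never indexed.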

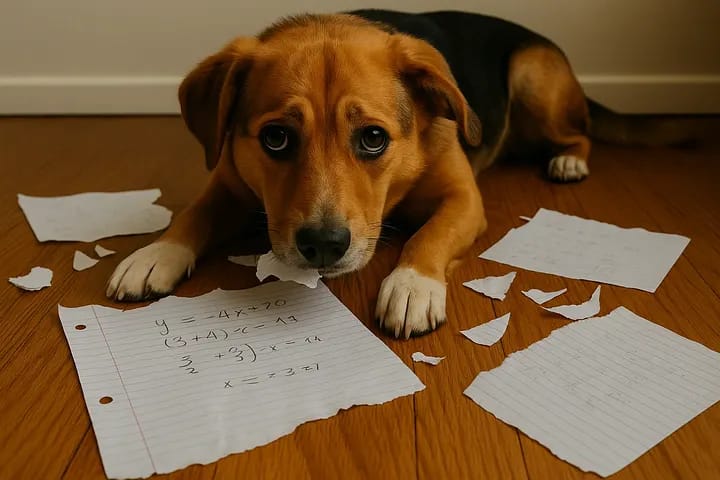

Want to fake a sick day? Generate a photo of a car crash in your own driveway. Need a refund? Create a dented box. Homework eaten by the dog? There’s an image for that too.

“Pics or it didn’t happen”? Try this: Pics because it didn’t happen.

Ethics Check: Fraud or Just Friction?

Let’s take a step back.

What if someone uses an AI image to claim a mental health day, just to get space they need? Is that deception — or self-care? What if a customer uses a fake photo to get justice from a company that ignored a real complaint?

The lines are blurring.

Plato warned us about mistaking shadows for truth. Baudrillard called them simulacra: copies without originals. Nietzsche might shrug: “There are no facts, only interpretations.”

In this light, the biggest danger might not be AI itself — but our own willingness to stop questioning.

Real-World Cases: What’s Actually Happening

Here’s how it’s playing out:

1. Deepfake Ransom Calls

A CEO’s voice cloned to authorize a fund transfer. A mother gets a panicked call — her daughter’s voice, crying. None of it’s real.

- In the UAE: $35 million lost when a bank manager heard a familiar (but fake) voice.

- In Arizona: A parent nearly wired money after hearing her “daughter” begging for help.

2. Hyper-Personalized Phishing

Forget those old typo-ridden phishing emails. AI can now mimic your manager’s style, mention recent meetings, and include attachments that look totally legit.

These aren’t broad attacks. They’re precision-guided.

3. Romance & Crypto Cons

Scammers build AI-generated profiles, flirt on dating apps, and once the trust is deep, push a “can’t-miss” investment.

- In the UK: A woman was conned out of £350,000 by someone proposing via deepfake video.

4. Deepfake Celebrity Endorsements

Seen Oprah or Musk promoting a crypto scheme? Probably fake. These videos are crafted to look slick, credible, and urgent — everything needed to bypass your defenses.

So, What Can We Actually Do?

The answer isn’t to panic. It’s to adapt.

- Embrace Skepticism: Not paranoia, just a pause. Verify before you trust. Make doubt part of the process.

- Fight AI with AI: Deploy systems that recognize AI fingerprints. Just as spam filters evolved, fraud detection must step up.

- Layer Trust: Don’t rely on one signal. Use metadata, timestamps, GPS, and multi-channel verification. One image shouldn’t equal proof.

- Update Your Ethics and Policies: HR, legal, and compliance teams need to revisit their assumptions. Our rules were written in a pre-AI world. That world is gone.
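Layering trust can be sketched as a set of independent checks that must agree before a claim is accepted. This is a minimal illustration under stated assumptions, not a production fraud system. The `Evidence` fields, the 48-hour and 5 km tolerances, and the "all three must pass" rule are all hypothetical. It also assumes the capture time and GPS coordinates were already extracted from the photo (for example from EXIF) by an upstream step not shown. Missing metadata counts as a failed check rather than a neutral one, because a generated or scrubbed image may carry no camera metadata at all, and metadata that is present can be edited.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt
from typing import Dict, Optional, Tuple

@dataclass
class Evidence:
    # Hypothetical fields, assumed to be extracted upstream (e.g. from EXIF).
    capture_time: Optional[datetime]     # None if the metadata is missing
    gps: Optional[Tuple[float, float]]   # (latitude, longitude) or None
    confirmed_out_of_band: bool          # e.g. a callback to a number already on file

def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(h))

def layered_checks(ev: Evidence, claimed_time: datetime,
                   claimed_location: Tuple[float, float],
                   max_hours: float = 48, max_km: float = 5.0) -> Dict[str, bool]:
    """Evaluate each trust signal independently; missing data counts as a failure."""
    return {
        "timestamp_consistent": ev.capture_time is not None
        and abs(ev.capture_time - claimed_time) <= timedelta(hours=max_hours),
        "location_consistent": ev.gps is not None
        and haversine_km(ev.gps, claimed_location) <= max_km,
        "out_of_band_confirmed": ev.confirmed_out_of_band,
    }

def needs_manual_review(checks: Dict[str, bool], min_passed: int = 3) -> bool:
    """Route to a human unless enough independent signals agree (default: all three)."""
    return sum(checks.values()) < min_passed

if __name__ == "__main__":
    # Hypothetical claim: water damage reported at a home address.
    claim_time = datetime(2024, 5, 1, 9, 0)
    home = (40.7128, -74.0060)
    photo_with_metadata = Evidence(datetime(2024, 5, 1, 8, 30), (40.7130, -74.0050), True)
    scrubbed_photo = Evidence(None, None, True)
    print(needs_manual_review(layered_checks(photo_with_metadata, claim_time, home)))  # False
    print(needs_manual_review(layered_checks(scrubbed_photo, claim_time, home)))       # True
```

Even when every check passes, this only raises the cost of faking a claim; a determined fraudster could forge consistent metadata, which is why the out-of-band confirmation sits alongside the image-derived signals rather than being replaced by them.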

Final Reflection

AI doesn’t just make content. It manufactures belief. And that’s the real power — and the real risk.

We used to say, “Seeing is believing.” But today? Seeing is just another prompt. And what you believe might depend on who told the story first.

So no, your dog didn’t eat your homework. But the picture says otherwise — and it fooled your teacher.

Now think bigger. What happens when that same tech ends up in the wrong hands?

When truth becomes optional, what’s left to protect?

Insights, strategy, and forward-thinking IT solutions.

Visit https://www.vyings.com